YouTube-style video platform on GCP: users upload via signed URLs, Pub/Sub triggers a Cloud Run FFmpeg service (360p transcode + thumbnails), metadata in Firestore, videos served with short-lived signed URLs through a Next.js web client.

I wanted a "YouTube-style" experience for anime clips: users upload large videos and quickly get a version optimized for playback. The challenges were moving big files reliably from the browser, processing them at scale (transcoding and thumbnails), keeping costs low, and preventing duplicate work when background jobs are retried. All of this had to be secure (no public write keys), and the UI had to stay snappy.

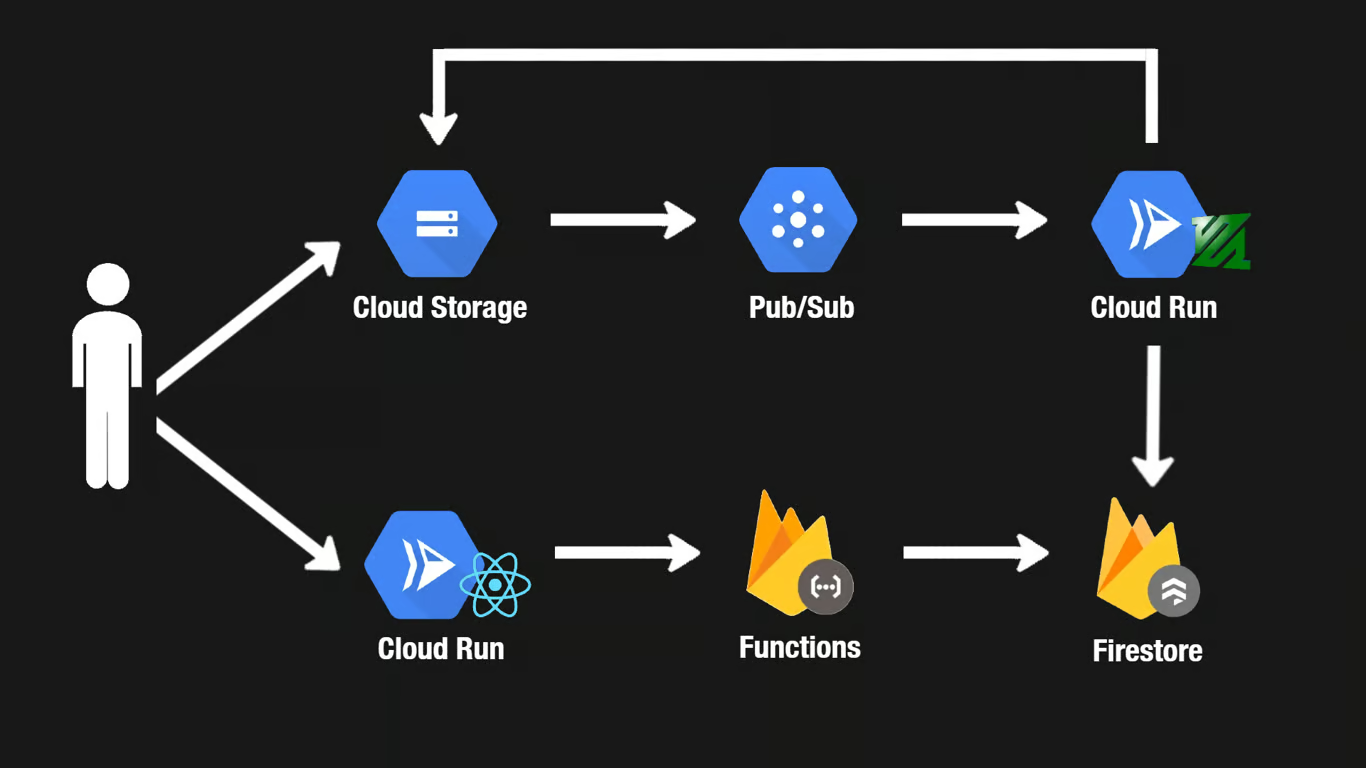

I built a fully serverless pipeline on Google Cloud. The Next.js web app authenticates users with Firebase and requests a short-lived V4 signed URL, which the browser uses to upload directly to a "raw" Cloud Storage bucket without passing through my servers. Cloud Storage publishes a notification to a Pub/Sub topic, and a push subscription delivers it to a Cloud Run service. That service uses FFmpeg to transcode the video to 360p and extract a thumbnail, uploads both to a "processed" bucket, and writes a video document to Firestore. The UI lists processed videos and streams each one through a short-lived signed read URL, so the files stay private.
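The signed-URL step can be sketched as follows. This is a minimal TypeScript sketch, not the project's actual code: the object-naming scheme (`<uid>-<timestamp>.<ext>`), the accepted content types, and the 15-minute write / 1-hour read lifetimes are all assumptions. The options object mirrors fields that `File.getSignedUrl()` in `@google-cloud/storage` accepts (`version`, `action`, `expires`, `contentType`); it is declared locally so the snippet runs without the SDK.

```typescript
// Local stand-in for the config accepted by File.getSignedUrl() in
// @google-cloud/storage (only the fields used here).
interface SignedUrlOptions {
  version: "v4";
  action: "read" | "write";
  expires: number; // absolute expiry time in milliseconds since the Unix epoch
  contentType?: string; // for writes, the PUT must send this exact Content-Type
}

// Assumed values: which video types to accept and how long URLs live.
const EXTENSIONS = new Map<string, string>([
  ["video/mp4", "mp4"],
  ["video/webm", "webm"],
  ["video/quicktime", "mov"],
]);
const UPLOAD_TTL_MS = 15 * 60 * 1000; // 15 minutes
const READ_TTL_MS = 60 * 60 * 1000; // 1 hour

// Builds a unique raw-bucket object name and signed-write options for one upload.
// The uid prefix (hypothetical naming scheme) ties each raw object to its uploader.
function buildUploadRequest(
  uid: string,
  contentType: string,
  nowMs: number = Date.now(),
): { objectName: string; options: SignedUrlOptions } {
  if (!uid) throw new Error("unauthenticated");
  const ext = EXTENSIONS.get(contentType);
  if (!ext) throw new Error(`unsupported content type: ${contentType}`);
  return {
    objectName: `${uid}-${nowMs}.${ext}`,
    options: { version: "v4", action: "write", expires: nowMs + UPLOAD_TTL_MS, contentType },
  };
}

// Builds signed-read options for streaming a processed video from a private bucket.
function buildReadOptions(nowMs: number = Date.now()): SignedUrlOptions {
  return { version: "v4", action: "read", expires: nowMs + READ_TTL_MS };
}
```

Inside the callable function, the result would be passed to `bucket.file(objectName).getSignedUrl(options)`. Because `contentType` is part of the V4 signature, the browser's PUT must send exactly that `Content-Type` header, or Cloud Storage rejects the request with a signature mismatch.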

Flow: Next.js (Firebase Auth) → callable function returns signed upload URL → client PUTs to Raw Bucket → GCS notifies Pub/Sub → Pub/Sub push → Cloud Run (FFmpeg) → Processed Bucket + thumbnail → Firestore videos document → Next.js lists & streams via signed read URL.
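Pub/Sub delivers messages at least once, so the same upload can reach Cloud Run more than once. One way to prevent duplicate work is sketched below in TypeScript under stated assumptions: the handler decodes the push envelope, ignores anything other than `OBJECT_FINALIZE` events, derives a job ID from bucket, object name, and generation, and claims that ID before running FFmpeg. `ClaimStore`, `InMemoryClaims`, `jobId`, and `handlePush` are hypothetical names; in a real deployment the claim would more likely be a Firestore document written with `create()`, which fails if the document already exists. The sketch also assumes the notification uses the `JSON_API_V1` payload format, so the message data is the object's metadata as JSON.

```typescript
// Minimal shape of a Pub/Sub push request body (only the fields used here).
interface PushBody {
  message?: { data?: string; attributes?: Record<string, string> };
}

// The uploaded object, as described by a GCS JSON_API_V1 notification payload.
interface RawObject {
  bucket: string;
  name: string;
  generation: string;
}

// Decodes base64 message data as UTF-8 (anime titles may be non-ASCII).
function decodeBase64Utf8(b64: string): string {
  const binary = atob(b64);
  return new TextDecoder().decode(Uint8Array.from(binary, (c) => c.charCodeAt(0)));
}

// Returns the finalized object, or null for events that should be ignored.
function parseFinalize(body: PushBody): RawObject | null {
  const msg = body.message;
  if (!msg?.data || msg.attributes?.eventType !== "OBJECT_FINALIZE") return null;
  const obj = JSON.parse(decodeBase64Utf8(msg.data));
  if (typeof obj?.bucket !== "string" || typeof obj?.name !== "string") return null;
  return { bucket: obj.bucket, name: obj.name, generation: String(obj.generation ?? "") };
}

// One job per object generation: redeliveries share an ID, re-uploads do not.
// encodeURIComponent removes "/" so the ID is usable as a Firestore document ID.
function jobId(o: RawObject): string {
  return encodeURIComponent(`${o.bucket}/${o.name}#${o.generation}`);
}

// Hypothetical claim store; a Firestore create() call could play this role.
interface ClaimStore {
  tryClaim(id: string): boolean;
  release(id: string): void;
}

class InMemoryClaims implements ClaimStore {
  private readonly claimed = new Set<string>();
  tryClaim(id: string): boolean {
    if (this.claimed.has(id)) return false;
    this.claimed.add(id);
    return true;
  }
  release(id: string): void {
    this.claimed.delete(id);
  }
}

// Returns the HTTP status for the push request. Pub/Sub treats a success
// status as an ack and redelivers on errors, so ignored, malformed, and
// duplicate messages are acked with 204 and only failed work returns 500.
async function handlePush(
  body: PushBody,
  claims: ClaimStore,
  work: (o: RawObject) => Promise<void>,
): Promise<number> {
  let obj: RawObject | null;
  try {
    obj = parseFinalize(body);
  } catch {
    return 204; // undecodable payload: retrying would not help
  }
  if (!obj) return 204;
  const id = jobId(obj);
  if (!claims.tryClaim(id)) return 204; // already processed or in progress
  try {
    await work(obj);
    return 204;
  } catch {
    claims.release(id); // free the claim so the redelivered message can retry
    return 500;
  }
}
```

Releasing the claim on failure lets Pub/Sub's redelivery retry the job. A container that crashes mid-job would still leave a stale claim behind; a persistent store would typically handle that with a status field or a lease timeout.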

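The transcode also passes `-movflags +faststart`, which moves the MP4 index (the `moov` atom) to the start of the file so the browser can begin playback before the whole file has downloaded.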
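The FFmpeg invocations might look like the following. The write-up only specifies 360p output, a thumbnail, and `-movflags +faststart`; the codec choices (`libx264` and AAC), CRF 23, the `veryfast` preset, the 1-second thumbnail offset, and the 320 px thumbnail width are assumptions. The functions `transcodeArgs` and `thumbnailArgs` (hypothetical names) only build argument arrays, so they can be checked without FFmpeg installed.

```typescript
// Builds FFmpeg arguments for the 360p H.264/AAC rendition (assumed settings).
function transcodeArgs(input: string, output: string): string[] {
  return [
    "-y", // overwrite output: a retried job may find a leftover file
    "-i", input,
    "-vf", "scale=-2:360", // 360 px tall; width keeps aspect ratio, rounded to even
    "-c:v", "libx264",
    "-preset", "veryfast",
    "-crf", "23",
    "-pix_fmt", "yuv420p", // widest browser compatibility
    "-c:a", "aac",
    "-b:a", "128k",
    "-movflags", "+faststart", // put the moov index first so playback starts sooner
    output,
  ];
}

// Builds FFmpeg arguments for a single-frame JPEG thumbnail (assumed settings).
function thumbnailArgs(input: string, output: string, atSeconds = 1): string[] {
  return [
    "-y",
    "-ss", String(atSeconds), // placed before -i: fast seek on the input
    "-i", input,
    "-frames:v", "1", // grab exactly one frame
    "-vf", "scale=320:-2", // 320 px wide, height keeps aspect ratio
    "-q:v", "3", // JPEG quality (2-31, lower is better)
    output,
  ];
}
```

In the Cloud Run service these arrays would be passed to something like `spawn("ffmpeg", transcodeArgs(localRaw, localOut))` from `node:child_process`. `scale=-2:360` keeps the aspect ratio while rounding the width to an even number, which libx264 requires for 4:2:0 video.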